OpenAI has officially launched o3-pro, the latest and most advanced model in its o-series lineup. Earlier iterations of this model family have consistently delivered strong results across standard AI benchmarks — especially in math, programming, and scientific tasks — and o3-pro builds on those strengths.

The release notes for OpenAI’s o3-pro read, in part: “Like o1-pro, o3-pro is a version of our most intelligent model, o3, designed to think longer and provide the most reliable responses. Since the launch of o1-pro, users have favored this model for domains such as math, science, and coding—areas where o3-pro continues to excel, as shown in academic evaluations.”

The o3-pro model is currently available for Pro and Team users in ChatGPT and in its API, with availability for Edu and Enterprise accounts expected next week, following a rollout schedule similar to previous models.

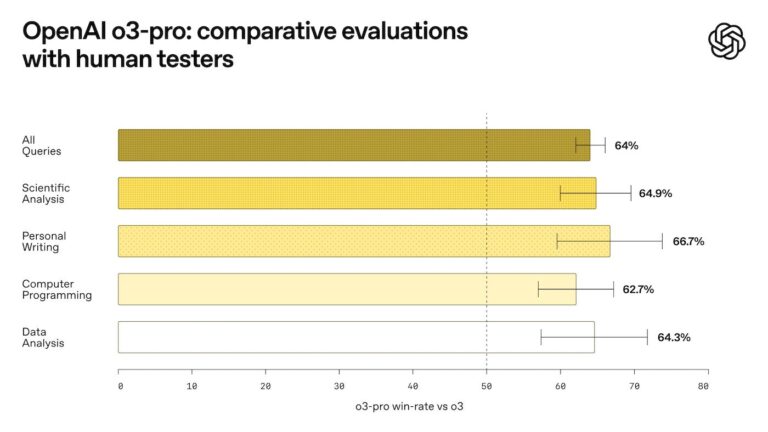

Comparative evaluations

Before publishing benchmark data, OpenAI had human testers try o3-pro and compare its responses against those of o3. A majority of these testers preferred o3-pro over o3 in every category reported, including:

- All queries (64%)

- Scientific analysis (64.9%)

- Personal writing (66.7%)

- Computer programming (62.7%)

- Data analysis (64.3%)

Pass@1 accuracy and efficiency benchmarks

Pass@1 is a widely used accuracy measure for modern AI models: it reports how often a model produces a correct response on its first attempt. On all three benchmarks OpenAI reported, o3-pro outperforms both o3 and o1-pro.

| Model | Competitive mathematics (AIME 2024) | PhD-level science (GPQA Diamond) | Competitive coding (Codeforces Elo) |
|---|---|---|---|
| o3-pro | 93% | 84% | 2748 |
| o3 | 90% | 81% | 2517 |
| o1-pro | 86% | 79% | 1707 |
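To make the pass@1 idea concrete, here is a minimal Python sketch. It assumes each problem has already been graded as correct or incorrect on the model's first attempt; the function name `pass_at_1` and the sample data are hypothetical illustrations, not OpenAI's evaluation code or results. It applies to the percentage-style columns only, since the Codeforces column is a rating rather than a percentage.

```python
def pass_at_1(first_attempt_correct: list[bool]) -> float:
    """Return the fraction of problems answered correctly on the first attempt."""
    if not first_attempt_correct:
        raise ValueError("need at least one graded problem")
    # True counts as 1 and False as 0, so sum() gives the number of correct answers.
    return sum(first_attempt_correct) / len(first_attempt_correct)


# Hypothetical grading results for five problems (not OpenAI data).
sample_results = [True, True, False, True, True]
print(f"pass@1 = {pass_at_1(sample_results):.0%}")
```

With four of the five hypothetical problems solved on the first try, the sketch reports a pass@1 of 80%.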

4/4 reliability benchmarks

OpenAI also ran its models through a stricter set of "4/4 reliability" benchmarks. In these evaluations, a model is considered successful on a question only if it answers correctly in all four of four attempts. A single incorrect attempt counts as a failure for that question.

| Model | Competitive mathematics (AIME 2024) | PhD-level science (GPQA Diamond) | Competitive coding (Codeforces Elo) |
|---|---|---|---|
| o3-pro | 90% | 76% | 2301 |
| o3 | 80% | 67% | 2011 |
| o1-pro | 80% | 74% | 1423 |
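The all-or-nothing scoring rule can be sketched in a few lines of Python. This is an illustration under stated assumptions, not OpenAI's harness: the function name `four_of_four_reliability`, the per-question list of four graded attempts, and the sample data are all hypothetical. The sketch also prints first-attempt accuracy for the same data to show why the 4/4 figure can only be equal to or lower than it.

```python
def four_of_four_reliability(attempts_per_question: list[list[bool]]) -> float:
    """Return the fraction of questions answered correctly in all four attempts."""
    if not attempts_per_question:
        raise ValueError("need at least one question")
    for attempts in attempts_per_question:
        if len(attempts) != 4:
            raise ValueError("each question needs exactly four graded attempts")
    # A question passes only if every one of its four attempts is correct.
    passed = sum(all(attempts) for attempts in attempts_per_question)
    return passed / len(attempts_per_question)


# Hypothetical graded attempts for four questions (not OpenAI data).
sample_attempts = [
    [True, True, True, True],      # passes: 4 of 4 correct
    [True, True, True, False],     # fails: one miss is enough
    [True, True, True, True],      # passes
    [False, False, False, False],  # fails
]

first_attempt_accuracy = sum(a[0] for a in sample_attempts) / len(sample_attempts)
print(f"first-attempt accuracy = {first_attempt_accuracy:.0%}")
print(f"4/4 reliability = {four_of_four_reliability(sample_attempts):.0%}")
```

In this made-up data the second question is right on the first try but misses once later, so it counts toward first-attempt accuracy (75%) but not toward 4/4 reliability (50%).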

Limitations of o3-pro

o3-pro has several limitations to consider:

- At the time of this writing, temporary chats in o3-pro are disabled while the OpenAI team addresses a technical issue.

- o3-pro does not support image generation. Users who need image generation functionality are urged to use GPT-4o, OpenAI o3, or OpenAI o4-mini.

- o3-pro does not support OpenAI’s Canvas interface. It’s unclear if support will be added at a later date.

Weighing the pros and cons of o3-pro

OpenAI acknowledges that o3-pro responds more slowly than o1-pro in some instances, a tradeoff that results from the additional features in the latest version. As TechnologyAdvice Managing Editor Corey Noles writes in his user guide on TechRepublic sister site The Neuron, “o3‑Pro isn’t your everyday chat buddy—it’s the brainiac you summon when accuracy trumps speed.”

With the ability to search the internet in real time, perform complex data analysis, provide reasoning based on visual prompts, and more, o3-pro is the clear winner when it comes to overall functionality.
