During this month’s Pure Accelerate 2025 conference in Las Vegas, Par Botes, vice president of AI Infrastructure at Pure Storage, provided an overview of some of the latest breakthroughs in AI, particularly those relevant to enterprise users.

Significant improvements in smaller LLMs

Botes noted that smaller large language models (LLMs) have recently improved significantly: they use far fewer parameters than the largest models, yet their accuracy remains high.

“AI is becoming less complex with smaller models that are just as capable as the very large ones,” said Botes. “This is due to improvements in training data refinement, model architectures, and the algorithms used in training pipelines.”

AI is more affordable

In parallel, AI is becoming increasingly affordable. Benchmarks of various AI services show that the cost to users of some models has fallen by as much as 100 times over the past two years for inference workloads. As a result, AI investment is surging. The AI Index 2025 Annual Report by Stanford University found that AI investment grew from more than $10 billion in 2023, which was more than any other investment category that year, to $30 billion in 2024.

Innovation is on the rise, and slow storage has no place in AI systems

These gains are driving innovation in the enterprise. Now that AI inference runs quickly, the rest of the IT stack is being reworked to keep pace. According to Botes, Pure Storage FlashBlade//EXA can deliver data to AI engines at speeds exceeding 10 TB/second.

“We now have affordable access to advanced AI and infrastructure,” said Botes. “The barrier of entry for high-performance AI dramatically lowered in 2025.”

He argued that slow storage has no place in AI systems because computation moves so quickly; high-performance, scalable storage is needed to support end-to-end AI/ML workflows. For that reason, he doesn’t believe that traditional storage architectures built on data silos and disks will remain viable for long.

“The cost of GPUs is so high that you are wasting money if you can’t support them with flash storage,” said Botes. “All storage research dollars are going into flash, whereas disk research is at a standstill.”
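Botes's point about wasted GPU spend can be made concrete with some back-of-the-envelope arithmetic. The Python sketch below estimates how long a GPU cluster sits idle during one pass over a dataset when storage delivers data more slowly than the GPUs can consume it. The function name, the dataset size, the GPU ingest rate, the slow-storage rate, and the hourly cluster cost are all hypothetical values chosen for illustration; only the 10 TB/second figure comes from the talk. It also assumes a simplified model in which reads and compute do not overlap beyond the slower of the two rates.

```python
def gpu_idle_cost(dataset_tb, storage_tb_per_s, gpu_ingest_tb_per_s, cluster_cost_per_hour):
    """Return (idle_seconds, wasted_dollars) for one full pass over the dataset.

    Simplified model: the pipeline runs at the slower of the storage rate
    and the rate at which the GPUs can ingest data.
    """
    # Time needed if data always arrives as fast as the GPUs can consume it.
    ideal_s = dataset_tb / gpu_ingest_tb_per_s
    # Time actually taken: the slower component sets the pace.
    actual_s = dataset_tb / min(storage_tb_per_s, gpu_ingest_tb_per_s)
    idle_s = actual_s - ideal_s
    # Cost of the cluster for the time it spent waiting on storage.
    wasted = idle_s * cluster_cost_per_hour / 3600
    return idle_s, wasted


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 TB dataset, GPUs able to ingest 10 TB/s,
    # cluster billed at $3,600 per hour.
    for storage_rate in (2, 10):  # a hypothetical slow tier vs. the 10 TB/s cited in the talk
        idle_s, wasted = gpu_idle_cost(1000, storage_rate, 10, 3600)
        print(f"storage {storage_rate} TB/s: idle {idle_s:.0f} s, wasted ${wasted:.2f}")
```

Under these assumptions the idle time shrinks to zero once storage matches the GPUs' ingest rate, which is the condition Botes is arguing for. Real pipelines overlap I/O and compute and cache data, so actual figures would differ.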

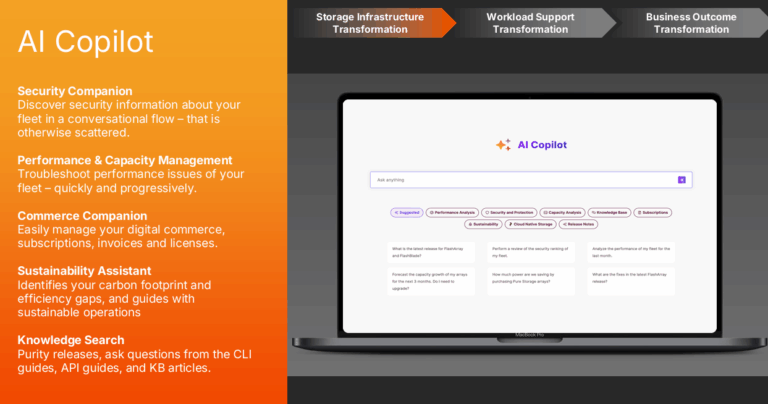

AI Copilot for Pure Storage arrays

In addition, Pure Storage announced the general availability of its own AI Copilot inside its storage arrays. This always-on assistant delivers personalized, fleet-aware insights, with agents available for topics including security information, performance issues, digital commerce, sustainable operations, and the support center.

Botes explained that the system comprises two agents: one focused on storage performance and one on security and compliance. Pure Storage arrays report back to the company every 30 seconds; this data is used to detect problems, create service tickets, note trends, address queries, and figure out how to rearrange workloads.

“You can ask AI Copilot what systems have the highest load, where there might be security threats, how to solve performance or security issues, and what the result would be of moving a workload from A to B,” said Botes. “Some customers use AI Copilot to give them a summary each morning of everything that happened with storage since they left the office the previous night.”

AI deployment challenges and tips

Botes concluded with a summary of AI deployment challenges and some tips on how to achieve AI success.

- Design: Think beyond the chatbot. Refine for the target user and focus on the product.

- Evaluation: Regularly evaluate AI performance and find ways to improve it.

- Data silos: Bringing relevant data together is a common pain point.

- Talent: Talent remains a limiting factor as modern AI skills are not mainstream.

- Deployment: Evaluate constantly and collect feedback.

- Scale: Start small, keep it simple, and stay user-focused.

- Optimize: Fine-tune models to drive efficiency.
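The evaluation and deployment tips above could be put into practice with something as small as a fixed test set that is re-run after every model or prompt change. The Python sketch below is one possible approach, not something Botes described: it scores a model by exact-match accuracy and flags a regression against a stored baseline. The function names, the toy model, the test cases, and the 0.02 tolerance are all hypothetical.

```python
from typing import Callable


def exact_match_accuracy(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of prompts whose answer matches the expected text (case-insensitive)."""
    if not cases:
        raise ValueError("evaluation set is empty")
    hits = sum(
        1
        for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(cases)


def has_regressed(current: float, baseline: float, tolerance: float = 0.02) -> bool:
    """True when accuracy dropped by more than the allowed tolerance."""
    return current < baseline - tolerance


def toy_model(prompt: str) -> str:
    """Stand-in for a real model call; returns canned answers."""
    answers = {"2+2": "4", "capital of France": "Paris"}
    return answers.get(prompt, "unknown")


# Hypothetical evaluation set; a real one would come from the target users' tasks.
CASES = [("2+2", "4"), ("capital of France", "paris"), ("largest planet", "Jupiter")]

if __name__ == "__main__":
    accuracy = exact_match_accuracy(toy_model, CASES)
    print(f"accuracy: {accuracy:.2f}")
    print(f"regressed vs. 0.90 baseline: {has_regressed(accuracy, 0.90)}")
```

Exact match is a deliberately simple metric; free-form answers usually need a softer comparison, and user feedback collected after deployment can be folded into the same test set over time.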

“Find partners who can accompany you on your AI journey and simplify deployments,” said Botes.