California hosts the headquarters of many tech giants and could set a precedent for AI regulation.

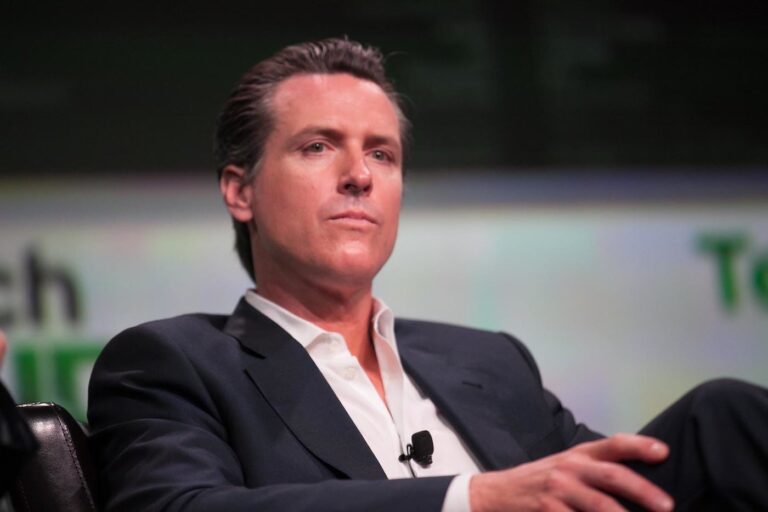

The California Report on Frontier AI Policy, published on Tuesday, outlines new plans for AI guardrails that could be implemented as state law. It was drawn up by experts after California Governor Gavin Newsom vetoed the controversial AI regulation bill SB 1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, in September 2024.

Since March, when an initial draft of the report was published, there has been more evidence that AI models contribute to “chemical, biological, radiological, and nuclear (CBRN) weapons risks,” with developers reporting “capability jumps” in these areas, the authors wrote. They said AI could cause “irreversible harm” if appropriate safeguards are not implemented.

The authors also emphasised that regulations must be “commensurate with the associated risk” and “not so burdensome as to curb innovation.”

Report’s authors disparage the ‘big, beautiful bill’ that would ban state-level AI laws

The report comes as a Republican-backed budget bill makes its way through Congress that would bar US states and localities from regulating AI for the next 10 years. Its sponsors argue that a unified national framework would be better than a fragmented patchwork of state laws. Critics note, however, that eliminating state compliance measures designed to prevent bias and protect AI consumers would also attract lucrative tech companies that want to avoid regulatory delays.

So far, California has largely refrained from implementing broad or specific AI regulations, opting instead for voluntary agreements.

“Carefully targeted policy in California can both recognize the importance of aligning standards across jurisdictions to reduce compliance burdens on developers and avoid a patchwork approach while fulfilling states’ fundamental obligation to their citizens to keep them safe,” the authors wrote.

Third-party risk evaluations are key to regulating AI models, the researchers say

The crux of Newsom’s problem with SB 1047 was that it targeted all large models regardless of risk profile, instead of focusing on high-risk models of all sizes. The report’s authors agreed, saying that factors other than size, such as “evaluations of risk” and a model’s “downstream footprint,” must be taken into account when categorising models for regulation.

Mandating third-party risk evaluations is especially important because AI developers do not always voluntarily share information about “data acquisition, safety and security practices, pre-deployment testing, and downstream impacts.” Many operate as black boxes. The CEO of Anthropic has even admitted his company doesn’t fully understand how its AI system works, raising doubts about whether tech executives can accurately assess the risks involved.

External evaluations, often conducted by groups with greater demographic diversity than California’s tech companies, allow for broader representation of the communities most vulnerable to AI bias, the authors noted. Making such audits mandatory could also increase transparency across the board: companies would be incentivised to improve their safety practices to avoid scrutiny, which would in turn reduce their liability exposure.

Nevertheless, carrying out such evaluations would require access to company data that developers may be reluctant to share. In its evaluation of OpenAI’s o3 model, METR, an independent AI safety research firm, said it was given only “limited access” to information about the model, making it difficult to fully interpret the results and assess potential safety risks.

How the report links to SB 1047

After vetoing SB 1047, Newsom sought to appease the bill’s pro-safety supporters by outlining several new initiatives related to generative AI. One of these was that the state would convene a group of AI experts and academics, including Stanford University professor and AI “godmother” Fei-Fei Li, to “help California develop workable guardrails.” The plan for these guardrails is outlined in the report.

SB 1047 would have been the strongest regulation in the country regarding generative AI. By placing strict safety requirements on developers, it aimed to prevent AI models from causing large-scale harm to humanity, such as through nuclear war or bioweapons, or major financial losses.

The bill offered protections for industry whistleblowers, mandated that large AI developers be able to fully shut down their models, and required major AI companies to adhere to strict safety and security protocols. The report says that the whistleblower protections should remain, but does not mention a kill switch.

Much of Silicon Valley — including OpenAI, Meta, Google, and Hugging Face — publicly disparaged SB 1047, calling it a threat to innovation and criticising its “technically infeasible requirements.” The bill did gain support from Elon Musk at the time, while two former OpenAI employees also wrote a letter to Newsom criticising their former employer for opposing SB 1047.

Read TechRepublic’s coverage of California’s AI regulation efforts, from the proposed SB 1047 to Governor Newsom’s veto, to understand the evolving legal landscape.